The Role of GPUs in Machine Learning and Data Processing

Graphics Processing Units (GPUs), originally designed to accelerate rendering in computer graphics, have become essential tools in machine learning and data processing. Their ability to perform many computations in parallel has made them central to the era of big data and artificial intelligence (AI). As machine learning models grow in complexity and datasets grow rapidly in size, GPUs supply the computational power that modern applications require.

Understanding GPUs and Their Strengths

GPUs are specialized processors designed to perform many calculations simultaneously. Central Processing Units (CPUs) dedicate their resources to a small number of powerful cores optimized for fast, largely sequential execution of individual threads; GPUs instead trade per-thread speed for massive parallelism. This makes them ideal for large-scale matrix and vector operations, which are common in machine learning algorithms and data analysis.
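To make the idea of data parallelism concrete, here is a minimal Python sketch (plain CPU code, not actual GPU code) of an element-wise operation, SAXPY (y = a·x + y), written as a "kernel" that computes a single output index. Because each output depends only on its own index, the indices can be evaluated in any order, or all at once, with the same result; this independence is what lets a GPU assign one thread per element. The function names `saxpy_element` and `launch` are hypothetical.

```python
import random


def saxpy_element(i: int, a: float, x: list[float], y: list[float]) -> float:
    """Compute one output element; it reads only index i, so no element depends on another."""
    return a * x[i] + y[i]


def launch(a: float, x: list[float], y: list[float], shuffle: bool = False) -> list[float]:
    """Emulate a kernel launch by applying saxpy_element to every index.

    On a GPU each index would run on its own thread; here we simply loop,
    optionally in a random order to show that evaluation order does not matter.
    """
    n = len(x)
    out = [0.0] * n
    order = list(range(n))
    if shuffle:
        random.shuffle(order)
    for i in order:
        out[i] = saxpy_element(i, a, x, y)
    return out


x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
print(launch(2.0, x, y))                # [12.0, 24.0, 36.0, 48.0]
print(launch(2.0, x, y, shuffle=True))  # same result regardless of order
```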

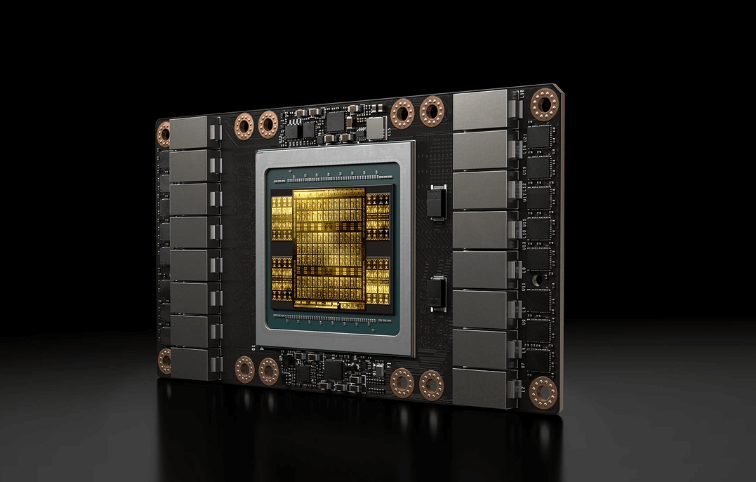

A GPU contains thousands of simpler cores, allowing it to perform numerous computations concurrently. This architecture processes data-intensive workloads faster than a CPU, whose fewer cores are optimized for single-thread performance. As a result, GPUs are particularly suited to tasks that apply the same mathematical operation to many data elements independently, such as training deep learning models or processing large datasets.
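A rough way to see why this architecture suits machine learning: in a matrix multiplication, each output entry is an independent dot product. The plain-Python sketch below (CPU code, for illustration only) isolates that unit of work; `matmul_element` is a hypothetical name for what a single GPU thread might compute.

```python
def matmul_element(A: list[list[float]], B: list[list[float]], i: int, j: int) -> float:
    """Dot product of row i of A with column j of B: one independent unit of work."""
    return sum(A[i][k] * B[k][j] for k in range(len(B)))


def matmul(A: list[list[float]], B: list[list[float]]) -> list[list[float]]:
    """Naive matrix multiply; every C[i][j] could be computed by a separate GPU thread."""
    m, p = len(A), len(B[0])
    return [[matmul_element(A, B, i, j) for j in range(p)] for i in range(m)]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = matmul(A, B)
print(C)  # [[19, 22], [43, 50]]

# A 4096 x 4096 output has 4096 * 4096 independent dot products,
# far more than enough to keep thousands of GPU cores busy.
print(4096 * 4096)  # 16777216
```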

The Importance of GPUs in Machine Learning

Machine learning, particularly deep learning, relies heavily on iterative mathematical computations, including matrix multiplications and gradient calculations. These operations are computationally expensive and can take a long time on traditional CPUs. Because they decompose into many independent arithmetic operations, GPUs can execute them in parallel and accelerate them significantly.
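As a worked example, assume a linear model with weight vector $w$, an $N \times d$ input matrix $X$, and a target vector $y$ (these symbols are introduced here for illustration). The mean squared error loss and its gradient both reduce to matrix-vector products, exactly the kind of operation GPUs parallelize:

```latex
L(w) = \frac{1}{N}\,\lVert Xw - y \rVert_2^2,
\qquad
\nabla_w L = \frac{2}{N}\, X^\top (Xw - y)
```

Computing $Xw$ alone takes $N \cdot d$ multiply-adds, and each of its $N$ entries can be computed independently of the others.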

During model training, GPUs process large volumes of data, compute the gradient of the loss function with respect to the model's parameters, and update those parameters to reduce the loss. Performing these computations in parallel reduces training time, allowing researchers and developers to experiment with more complex models and larger datasets. This efficiency is crucial for applications such as natural language processing, image recognition, and autonomous systems.
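The training loop described above can be sketched on the CPU for the simplest possible model: a one-variable linear regression trained by full-batch gradient descent. This is an illustrative assumption rather than the setup of any particular framework; the learning rate, epoch count, and toy data are arbitrary choices. A GPU framework would run the per-sample forward pass and the gradient sums in parallel.

```python
def train_linear(xs: list[float], ys: list[float], lr: float = 0.05, epochs: int = 500) -> tuple[float, float]:
    """Fit y ~ w*x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: one prediction per sample (independent, so parallelizable).
        preds = [w * x + b for x in xs]
        errors = [p - y for p, y in zip(preds, ys)]
        # Backward pass: MSE gradients are sums over samples (a parallel reduction).
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Update: move each parameter against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))  # approximately 2.0 1.0
```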

In addition to training, GPUs are valuable for inference, the process of making predictions with a trained model. Inference involves running the model on new data, which also benefits from the high-speed calculations GPUs provide. This capability is critical for real-time applications like recommendation systems, speech recognition, and fraud detection.
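As a minimal sketch of inference, assume a model that has already been trained: here, a logistic model whose parameters are hand-picked, hypothetical values rather than the output of real training. Prediction is just a forward pass, repeated independently for every input in a batch, which is why batched inference maps well onto a GPU.

```python
import math


def predict_batch(weights: list[float], bias: float, batch: list[list[float]]) -> list[float]:
    """Score a batch of feature vectors with a (hypothetical) trained logistic model.

    Each row is scored independently of the others.
    """
    scores = []
    for features in batch:
        z = sum(w * f for w, f in zip(weights, features)) + bias
        scores.append(1.0 / (1.0 + math.exp(-z)))  # sigmoid -> probability in (0, 1)
    return scores


# Hypothetical "trained" parameters, chosen by hand for illustration.
weights = [1.5, -2.0]
bias = 0.0
batch = [[0.0, 0.0], [2.0, 0.0], [0.0, 2.0]]
print(predict_batch(weights, bias, batch))
```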

GPUs in Data Processing and Analytics

The role of GPUs extends beyond machine learning into the broader field of data processing and analytics. With the growth of big data, businesses and researchers are tasked with analyzing massive datasets to uncover insights. GPUs enhance this process by accelerating data preprocessing, feature extraction, and visualization.

For example, GPUs enable rapid data cleaning and transformation, reducing the time needed to prepare datasets for analysis. In data visualization, GPUs power tools that render dynamic, interactive graphics, allowing users to explore complex datasets intuitively. RAPIDS, an open-source suite of GPU-accelerated data science libraries that includes cuDF for dataframes and cuML for machine learning, uses GPUs to speed up data analytics workflows significantly.
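The kind of preprocessing described here can be sketched in plain Python: drop records with a missing value, then rescale a numeric column to the range [0, 1]. This is CPU code for illustration, and the function and field names are hypothetical. RAPIDS' cuDF offers a pandas-like DataFrame API for running operations of this shape on the GPU, but the sketch below does not use it.

```python
def clean_and_scale(rows: list[dict], column: str) -> list[dict]:
    """Drop rows missing `column`, then min-max scale that column to [0, 1].

    Both steps apply the same operation to every row independently (plus a
    min/max reduction), the pattern GPU dataframe libraries accelerate.
    """
    kept = [r for r in rows if r.get(column) is not None]
    if not kept:
        return []
    values = [r[column] for r in kept]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
    return [{**r, column: (r[column] - lo) / span} for r in kept]


rows = [
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": None},  # missing value, will be dropped
    {"id": 3, "amount": 30.0},
    {"id": 4, "amount": 20.0},
]
print(clean_and_scale(rows, "amount"))
# [{'id': 1, 'amount': 0.0}, {'id': 3, 'amount': 1.0}, {'id': 4, 'amount': 0.5}]
```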

Advancements in GPU Technology

The increasing demand for high-performance computing has driven significant advancements in GPU technology. Companies like NVIDIA, AMD, and Intel are continuously innovating to enhance GPU capabilities, making them more efficient and versatile.

Many modern GPUs include specialized hardware units for deep learning, such as NVIDIA's Tensor Cores and AMD's Matrix Cores. These units accelerate matrix multiplications, a critical operation in neural network training, and improve the performance of AI workloads. NVIDIA's CUDA platform and AMD's ROCm provide developers with tools to harness GPU power for various applications, simplifying programming and optimization.
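Matrix units like these operate on small, fixed-size blocks rather than whole matrices. The CPU sketch below shows how a large multiplication decomposes into tile-sized multiply-accumulate steps. The tile size of 2 is an arbitrary assumption for readability; real hardware tile shapes and supported precisions vary by GPU generation, and nothing here calls CUDA or ROCm.

```python
def matmul_tiled(A: list[list[int]], B: list[list[int]], tile: int = 2) -> list[list[int]]:
    """Multiply square matrices by accumulating products of small tiles.

    Each innermost block is a tile-sized multiply-accumulate, C_tile += A_tile @ B_tile,
    the kind of operation dedicated matrix units execute in hardware.
    Assumes n is a multiple of `tile` to keep the sketch short.
    """
    n = len(A)
    assert n % tile == 0, "sketch assumes n is a multiple of the tile size"
    C = [[0] * n for _ in range(n)]
    for i0 in range(0, n, tile):          # tile row of C
        for j0 in range(0, n, tile):      # tile column of C
            for k0 in range(0, n, tile):  # walk along the shared dimension
                # Multiply-accumulate one pair of tiles into the C tile.
                for i in range(i0, i0 + tile):
                    for j in range(j0, j0 + tile):
                        acc = C[i][j]
                        for k in range(k0, k0 + tile):
                            acc += A[i][k] * B[k][j]
                        C[i][j] = acc
    return C


A = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
I = [[1 if r == c else 0 for c in range(4)] for r in range(4)]
print(matmul_tiled(A, I) == A)  # True: multiplying by the identity returns A
```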

Multi-GPU setups and GPU clusters are also becoming common, allowing users to scale computational resources. These configurations enable distributed training, in which either the training data (data parallelism) or the model itself (model parallelism) is split across devices, making it possible to handle enormous datasets and model architectures that a single GPU cannot manage efficiently.
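A common form of distributed training is data parallelism: each GPU holds a copy of the model, processes its own slice of the batch, and the resulting gradients are averaged before the update. The sketch below simulates this on one CPU with a one-parameter model; the "devices" are just list shards, and the averaging step stands in for the all-reduce collective that libraries such as NCCL perform across real GPUs. The helper names are hypothetical.

```python
def local_gradient(w: float, shard: list[tuple[float, float]]) -> float:
    """MSE gradient for the model y ~ w*x on one device's shard of the batch."""
    n = len(shard)
    return 2.0 / n * sum((w * x - y) * x for x, y in shard)


def data_parallel_step(w: float, batch: list[tuple[float, float]], num_devices: int, lr: float) -> float:
    """One synchronous data-parallel step across simulated devices.

    1. Split the batch into equal shards, one per device (assumes an even split).
    2. Each device computes a gradient on its shard (in parallel on real hardware).
    3. All-reduce: average the gradients so every replica applies the same update.
    """
    size = len(batch) // num_devices
    shards = [batch[d * size:(d + 1) * size] for d in range(num_devices)]
    grads = [local_gradient(w, s) for s in shards]
    avg = sum(grads) / num_devices
    return w - lr * avg


batch = [(x, 3.0 * x) for x in [1.0, 2.0, 3.0, 4.0]]  # data from y = 3x
w = 0.0
for _ in range(100):
    w = data_parallel_step(w, batch, num_devices=2, lr=0.05)
print(round(w, 4))  # converges toward 3.0
```

With equal-sized shards, the average of the per-shard gradients equals the full-batch gradient, so every simulated device ends each step with identical parameters.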

GPUs and Cloud Computing

The integration of GPUs into cloud computing platforms has democratized access to high-performance computing resources. Cloud providers such as AWS, Google Cloud, and Microsoft Azure offer GPU instances that allow businesses and researchers to leverage GPU power without investing in expensive hardware.

This accessibility has accelerated innovation in AI and data processing, enabling startups and smaller organizations to compete in data-driven industries. Cloud-based GPUs also facilitate collaboration, as teams can share resources and work on large-scale projects remotely.

Challenges and Considerations

While GPUs offer significant advantages, their adoption comes with challenges. The high cost of GPUs and their supporting infrastructure can be a barrier for smaller organizations. Energy consumption is another concern: GPUs draw substantial power, which affects both operating costs and environmental sustainability.
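The electricity portion of that cost is simple arithmetic: energy in kilowatt-hours is the number of GPUs times watts per GPU times hours, divided by 1000, and cost is energy times the electricity price. The sketch below assumes a constant power draw and ignores cooling, CPUs, and networking; the inputs in the usage line are hypothetical values, not measurements of any real GPU.

```python
def energy_cost(num_gpus: int, watts_per_gpu: float, hours: float, price_per_kwh: float) -> tuple[float, float]:
    """Estimate energy (kWh) and electricity cost for GPUs at a constant power draw.

    energy = GPUs * watts * hours / 1000; cost = energy * price.
    Ignores cooling, host CPUs, networking, and variation in actual draw.
    """
    kwh = num_gpus * watts_per_gpu * hours / 1000.0
    return kwh, kwh * price_per_kwh


# Hypothetical inputs: 8 GPUs at 400 W for 24 hours at $0.15/kWh.
kwh, cost = energy_cost(8, 400, 24, 0.15)
print(kwh, round(cost, 2))  # 76.8 11.52
```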

Optimizing machine learning and data processing workflows for GPUs also requires specialized knowledge. Developers typically rely on frameworks that support GPU acceleration, such as TensorFlow, PyTorch, or RAPIDS, or program GPUs directly through CUDA or ROCm. Understanding how to distribute workloads effectively across GPU cores, and how to minimize data transfers between CPU and GPU memory, is critical to achieving maximum efficiency.
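One concrete part of distributing work across GPU cores is mapping data elements onto threads. In CUDA, threads are grouped into blocks, and a kernel typically computes its global index as `blockIdx.x * blockDim.x + threadIdx.x` and skips indices past the end of the data. The plain-Python sketch below emulates that pattern sequentially; the block size of 256 is a common but arbitrary choice, and `grid_size` and `emulate_launch` are hypothetical helpers, not a real API.

```python
from typing import Callable


def grid_size(n: int, block_size: int) -> int:
    """Number of thread blocks needed to cover n elements (ceiling division)."""
    return (n + block_size - 1) // block_size


def emulate_launch(n: int, block_size: int, kernel: Callable[[int], None]) -> None:
    """Emulate CUDA-style indexing: global index = block index * block size + thread index.

    Threads whose index falls past n do nothing, mirroring the usual
    `if (i < n)` bounds check in a real kernel.
    """
    for block_idx in range(grid_size(n, block_size)):
        for thread_idx in range(block_size):
            i = block_idx * block_size + thread_idx
            if i < n:  # the last block may be only partially used
                kernel(i)


n = 1000
out = [0] * n


def square(i: int) -> None:
    out[i] = i * i


emulate_launch(n, 256, square)
print(grid_size(n, 256))  # 4 blocks: 4 * 256 = 1024 threads cover 1000 elements
print(out[999])           # 998001
```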

The Future of GPUs in Computing

The future of GPUs in machine learning and data processing looks promising, with ongoing advancements in hardware and software. Architectures such as NVIDIA's Hopper, along with AMD's successive GPU generations, continue to push performance and efficiency higher. The convergence of GPUs with emerging technologies like quantum computing and neuromorphic chips could further expand their role in high-performance computing.

The rise of AI-specific accelerators, such as Google's Tensor Processing Units (TPUs) and Graphcore's Intelligence Processing Units (IPUs), adds pressure for GPUs to keep evolving to meet the demands of specialized workloads. Additionally, efforts to develop energy-efficient GPUs align with the growing focus on sustainable computing.

Conclusion

GPUs have revolutionized machine learning and data processing, enabling faster computations, enhanced efficiency, and scalability. Their ability to handle parallel tasks makes them indispensable for modern applications, from training complex AI models to analyzing massive datasets. As technology advances and the demand for computational power grows, GPUs will remain at the forefront of innovation, driving progress in artificial intelligence, big data, and beyond.